Saturday Feb 14, 2026

Wednesday, 28 August 2024 00:20

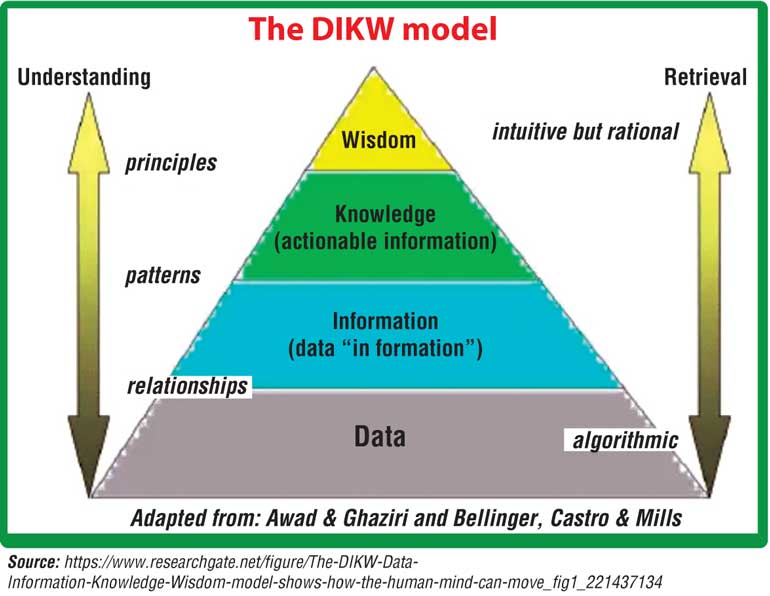

Information is how we form views and opinions which lead to knowledge and wisdom. So how we get information and what information we receive matters as that defines who we are.

Information is now cheap and plentiful

For thousands of years information was hard to come by. The first revolution of course was the printing press. Long form content in the form of books took off with that. Time sensitive content delivered in the form of newspapers helped keep us informed. (If you’re old enough you will remember the “Newsreel” that was shown right before a movie started — another distribution channel for timely content.)

With the information technology revolution, content creation became democratised: We don’t need to be “a writer” and be selected by someone else to publish. We don’t need money to publish. We don’t need permission to publish. I can write whatever I want in this blog or on X or LinkedIn or Facebook or whatever. I can put pretty much anything I want in a video and post it on YouTube or TikTok.

Today we are also in the era of generative AI where instead of humans, AI can create content. The jury is still out on whether AI created content is truly creative (and not just regurgitative) but it certainly can create cool content in any media now.

This is an incredible impact of the information technology revolution we have lived through in the last 50+ years.

Of course the challenge now is how do you get anyone to experience your content.

Content distribution

In the old days we had to buy books, go to a library, buy newspapers/magazines/movies etc. to get to content. The digital era changed that so that anyone can get to content easily.

Today we’re at the other end of the spectrum: Instead of information being hard to come by, all of human information/knowledge/wisdom is available to most people at their fingertips. Time sensitive information (“breaking news”) is shoved at us instantly instead of at 6 p.m. or 6 a.m. or at the top of the hour. All historical video content is available on demand.

With that explosion of available content, the question is who (or what) decides what content I will experience. More and more, it is not me.

Algorithmic content selection

Newspapers and their digital cousins (websites, apps, video channels) have some responsible person with a societally recognised role (the editor) selecting what gets shown and what gets highlighted. That is also what leads to a particular orientation for those platforms: CNN is left-leaning, anti-Trump, pro-Kamala, pro-US, anti-China, pro-war etc.; Fox News is right-leaning (and more); NYTimes is like CNN.

Thus, some team of human beings is choosing what I see when I go to their site. And I chose to go to their site (or their app) knowing their orientation. Those people are probably doing data-driven analysis to decide what words to use and what images to highlight etc., but CNN, for example, will not show you the horror that Israel delivered to Palestinian people last week (and yesterday and the week before) in full glory because that’s against their orientation. A human being is deciding not to show that whereas an algorithm would know that will get views and would show it if it was optimising for numbers.

Where do we get our information now?

I regularly go to the main global news sites (CNN, BBC, Al Jazeera etc.) to see whether something major has happened that I missed. However, more and more, most of the information I get is from an app: primarily X for news / politics / some tech and LinkedIn for more professional content.

But I find that most of the time I go to the “For you” feed of X now vs. the feed from people I follow. Why? Because that feed is designed to reinforce my beliefs and keep me scrolling down (and reposting), which further reinforces my beliefs and keeps me doing it more. I’m hooked.

TikTok of course cracked this first. Now everyone has an algorithm that delivers content that the system thinks will keep me scrolling. That’s X, YouTube, Facebook, LinkedIn and more. Some algorithms are better than others which gives them more eyeball time, more time to throw ads at me and more time for dopamine hits for me. All of that translates to more money for the platform and more narrowing and controlling of my views.

Algorithmic content selection is to information distribution what algorithmic trading is to market trading. One is focused on making more money, the other on shaping societal views and beliefs.

Threat to society and democracy

The wonderful information technology revolution is now unfortunately a threat to society in terms of how information itself is discovered and consumed.

AI created content is often mentioned as the threat to democracy but it seems more and more that the algorithms are a bigger risk. In the days of newspapers and websites, there was an editorial team and they chose what to show me. Journalism was about covering the whole story, not just the part that your beliefs are telling you to read. Of course real journalism too is dead for the most part because mostly people write what they get paid to write. But importantly, I know from their orientation what that twist is.

Now the algorithm chooses what to show me. I see more of the same and I get reinforced thinking that must be the only fact. The algorithm can keep me going down a rabbit hole forever. The algorithm doesn’t need to, and certainly does not want to, practice journalism and give me multiple sides of a story.

The worst part is that what I see is not what you will see. At one end of the spectrum that’s personalisation and that’s great: just let me see what I like to see. But when it’s a system reprogramming individuals, it is now breaking society because each person is being programmed differently. Individualism is wonderful, but “society” by definition needs some alignment. With individualised programming, we no longer have an aligned society.

Yuval Noah Harari’s book Sapiens talks about the power of the narrative: Having a story is how you control societies and populations.

We’ve let algorithms write our story. By traversing the “For You” feed, we have given the power of the narrative to the algorithm.

Shut down the algorithms

The democratisation of information creation is a fantastic thing. Free speech is a wonderful thing.

But we don’t need to let an algorithm that optimises for money or for influence control us. Algorithms have their own biases, either from how they were designed/trained or by the will of the operators. For example, if you criticise Christianity or Islam or Buddhism on X you will not get a warning but if you criticise Judaism you will. The warning means your content will not get shown and just like that you might as well not write it because it will not show up in anyone’s “For You” feed. No one is accountable for these biases or influences because it’s just the “algorithm”. And unlike human selection, the algorithm will keep me going down the rabbit hole that someone else chose essentially forever.

At that point, my story is written. My beliefs are altered. I have been taken over.

If we shut down the “For You” feeds then we go back to having to earn the right to go viral with “good” content that goes viral on its own, not because an algorithm chose to show it in the first 10 things I see in the morning. “Good” is defined under my control because I will see things from creators that I chose to follow.

Messaging apps don’t (yet) have algorithms: WhatsApp, Signal and Telegram still require me to sign up for what I want to read / watch / listen to (via groups), or someone needs to explicitly send it to me. Content goes viral on those apps because users explicitly share it with other users.

I believe that giving up the future of human society to algorithms is an existential threat to societal development. It is time for us to take control of our narrative: Shut down the algorithms.

Not all bad?

But algorithms are certainly not all bad. For example, today on X, I was presented with this beautiful thread:

I probably would not have run into this lovely thread if not for the algorithm showing it to me. And it showed it to me because I’ve read other similar threads by other people. It knows me.

Algorithmic content selection for areas of interest is useful. However, algorithmic content selection for topics like politics, current news and anything else that would’ve found its way to a good old newspaper is very dangerous given the potential to strongly orient me in a certain way.

Defining that boundary is hard.

While algorithmic content selection is certainly not all bad, the damage it is doing to society demands that we shut it down now.

We don’t need to stop social media or free speech — let people say/write/video whatever they want and let them earn their visibility and distribution through the recognition others give to that content. I propose that Governments across the world ban the “For You” feeds of all these apps and force the companies to remove that feature. The companies can still make money and produce useful products and we can all continue creating content and expressing thoughts freely.

After we shut them down, we can continue to work on ways to apply algorithms to improve information discovery and propagation. (Source: https://medium.com/@sanjiva.weerawarana/time-to-shutdown-the-algorithms-7ab6fd10ba9e)